If you work in SEO long enough, you eventually run into technical problems you can’t see from the surface. That’s where tools like Screaming Frog come in. It’s not flashy. Not trendy. But it quietly powers some of the most serious SEO site audits happening behind the scenes every day.

What is Screaming Frog?

Screaming Frog SEO Spider is a technical SEO crawler that scans websites and finds issues affecting search performance.

Is Screaming Frog worth using?

Yes, especially for technical SEO professionals, agencies, and site owners managing large websites.

How does Screaming Frog work?

It crawls website URLs like a search engine bot and collects technical data for analysis.

SEO Software and Technical Site Intelligence

Modern SEO software is no longer just about keywords and rankings. Today, search visibility depends heavily on technical performance, crawlability, and site structure. Tools that analyze website architecture are becoming essential rather than optional. Many websites lose rankings not because content is weak, but because technical signals confuse search engines. That’s where advanced crawling tools step in. They simulate search engine bots and expose hidden problems. From broken links to duplicate content, technical SEO tools provide clarity. Businesses using strong SEO software, including platforms such as Semrush, often gain stability in rankings because they fix structural problems before competitors even notice them.

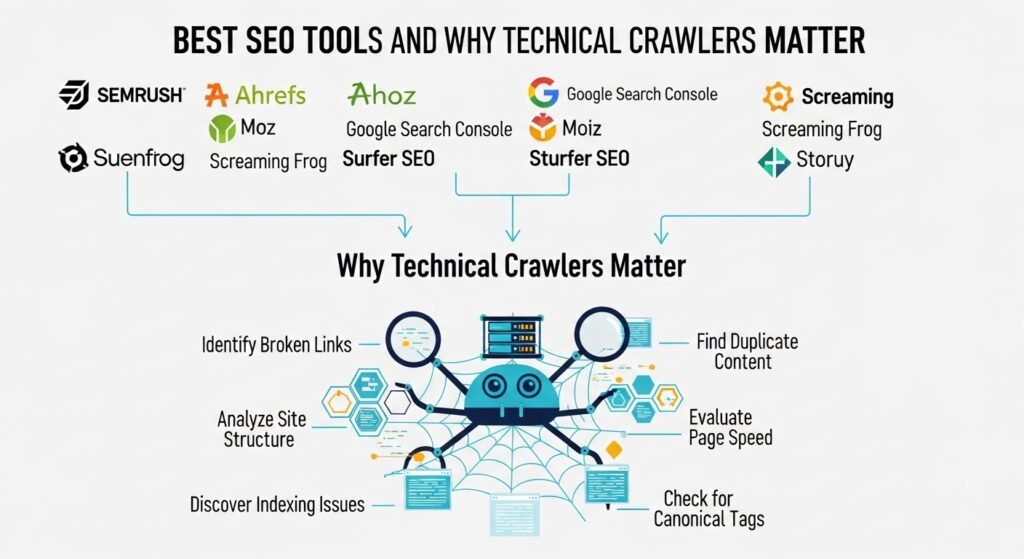

Best SEO Tools and Why Technical Crawlers Matter

The best SEO tools today combine multiple capabilities: keyword tracking, competitor research, and technical audits. But technical crawlers still hold a unique place in the stack. They don’t guess. They don’t estimate. They report what is actually on the site. Many SEO professionals pair crawlers with keyword platforms for complete visibility. Technical SEO often gets ignored until traffic drops. Then suddenly everyone wants answers. Crawlers help prevent that scenario. They act like a health scanner for websites. Instead of reacting to ranking losses, businesses can proactively monitor their technical performance and fix issues early.

Search Engine Land Insights and Industry Adoption

Publications like Search Engine Land often highlight technical SEO as a foundation layer for organic growth. Trends show increasing reliance on crawling tools for enterprise websites. Large platforms with thousands of pages cannot rely on manual inspection. Automation becomes necessary. Industry experts frequently mention technical audits as the first step in any recovery plan. Search algorithm updates often impact site structure signals. Businesses that regularly crawl their sites respond faster. Those who don’t usually struggle to identify root causes. The industry shift is clear. Technical SEO is now core strategy, not a secondary task.

SEO Site Audit Fundamentals in Modern Optimization

A modern SEO site audit goes far beyond checking title tags and meta descriptions. It includes analyzing site speed signals, indexation patterns, internal linking structures, and redirect chains. Crawling tools help visualize how search engines move through a site. This perspective is powerful. Many site owners think their site structure is logical. Crawlers often prove otherwise. Technical audits help identify orphan pages (pages no internal link points to), duplicate URLs, and crawl traps. Fixing these problems improves search engine efficiency. That efficiency often translates directly into better indexing and visibility. Technical clarity supports content strategy. It doesn’t replace it, and it works best when experienced SEO specialists turn the findings into priorities.
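To make redirect chains and orphan pages concrete, here is a minimal Python sketch. It assumes you already have crawl data in two simple dictionaries: `redirects` (URL to redirect target) and `links` (URL to the URLs it links to). The function names and the sample URLs are made up for illustration and are not part of any crawler's export format.

```python
def redirect_chain(start, redirects, max_hops=10):
    """Follow redirects from `start` and return every URL visited, in order."""
    chain = [start]
    seen = {start}
    while chain[-1] in redirects and len(chain) <= max_hops:
        target = redirects[chain[-1]]
        chain.append(target)
        if target in seen:  # redirect loop: stop instead of spinning forever
            break
        seen.add(target)
    return chain


def find_orphans(sitemap_urls, links, entry="/"):
    """Return sitemap URLs that no other page links to (the entry page is exempt)."""
    linked = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a page linking to itself does not count
    }
    return sorted(set(sitemap_urls) - linked - {entry})


# Hypothetical crawl data for a tiny site.
redirects = {"/old": "/older", "/older": "/new"}
links = {"/": ["/new"], "/new": ["/"], "/hidden": ["/"]}
sitemap = ["/", "/new", "/hidden"]

chain = redirect_chain("/old", redirects)
print(len(chain) - 1, "hops:", " -> ".join(chain))
print("orphans:", find_orphans(sitemap, links))
```

A chain with more than one hop is usually worth collapsing into a single redirect, and an orphan page is a candidate for a new internal link or for removal from the sitemap.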

Screaming Frog SEO Spider and Crawling Technology

The Screaming Frog SEO Spider works by crawling websites similarly to how search engine bots do. It follows links, scans page data, and builds a technical map of every URL it can reach. The tool collects metadata, status codes, canonical tags, and structured data signals. This information becomes essential during audits. Agencies often use it to diagnose sudden ranking drops. Developers use it during migrations. Content teams use it to identify duplicate content issues. The tool scales well for large websites. That scalability is why many enterprise SEO teams rely on it for routine technical monitoring and deep investigation.
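The follow-links-and-collect-data loop described above can be sketched in a few lines of Python. This is only an illustration of the general crawling idea, not how Screaming Frog itself is implemented. To keep it runnable without network access, it crawls a hypothetical in-memory site (`FAKE_SITE`) through a stand-in `fake_fetch` function; a real crawler would issue HTTP requests and respect robots.txt.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class PageDataParser(HTMLParser):
    """Collects the title, canonical URL, and outgoing links of one page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.title = ""
        self.canonical = None
        self.links = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            self.canonical = attrs.get("href")
        elif tag == "a" and attrs.get("href"):
            # Resolve relative links against the URL of the current page.
            self.links.append(urljoin(self.base_url, attrs["href"]))

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def crawl(start_url, fetch):
    """Breadth-first crawl of one host; `fetch(url)` returns (status, html)."""
    host = urlsplit(start_url).netloc
    queue, seen, report = deque([start_url]), {start_url}, {}
    while queue:
        url = queue.popleft()
        status, html = fetch(url)
        parser = PageDataParser(url)
        parser.feed(html)
        parser.close()
        report[url] = {
            "status": status,
            "title": parser.title.strip(),
            "canonical": parser.canonical,
            "links": parser.links,
        }
        for link in parser.links:
            # Stay on the same host and never queue a URL twice.
            if link not in seen and urlsplit(link).netloc == host:
                seen.add(link)
                queue.append(link)
    return report


# Hypothetical two-page site used instead of real HTTP requests.
FAKE_SITE = {
    "https://example.com/": (
        200,
        '<title>Home</title><a href="/shoes">Shoes</a><a href="/gone">Gone</a>',
    ),
    "https://example.com/shoes": (
        200,
        '<title>Shoes</title>'
        '<link rel="canonical" href="https://example.com/shoes">'
        '<a href="/">Home</a>',
    ),
}


def fake_fetch(url):
    # Unknown URLs behave like broken links.
    return FAKE_SITE.get(url, (404, ""))


report = crawl("https://example.com/", fake_fetch)
for url, data in report.items():
    print(data["status"], url, data["title"], data["canonical"])
```

The `report` dictionary is the "technical map": one row per URL with its status code, title, canonical tag, and outgoing links. The broken `/gone` link surfaces as a 404 row even though no page with that URL exists.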

SEO Software Tools and Integrated SEO Workflows

SEO software tools increasingly operate as part of integrated marketing workflows. Technical crawlers feed data into reporting dashboards, keyword platforms, and analytics tools. This creates a unified view of website performance. Businesses no longer treat SEO tasks as isolated processes. Everything connects. Technical health influences content performance. Content performance influences backlink growth. Crawlers help identify technical barriers blocking SEO success. Integration is the future of SEO workflows. Teams that connect technical data with content strategy usually outperform teams working in silos. Data becomes actionable rather than overwhelming.

Analyse SEO Site Data for Strategic Decisions

To analyse SEO site performance correctly, teams must move beyond surface metrics like traffic and impressions. Crawling data reveals structural patterns affecting long-term rankings. Index bloat, redirect chains, and duplicate parameters can quietly damage SEO performance. These issues rarely show in basic analytics dashboards. Crawlers uncover them. Once identified, teams can prioritize fixes based on impact. Some technical issues cause immediate ranking problems. Others create slow performance decay over time. Understanding the difference matters. Strategic SEO relies on technical clarity first, then optimization decisions follow naturally.
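Duplicate parameters are easier to spot once URLs are normalized. The sketch below groups crawled URLs that collapse to the same address after tracking parameters are dropped and the remaining parameters are sorted. The `TRACKING_PARAMS` list and the choice to ignore trailing slashes are assumptions for this example; check how your own site treats them before applying the same rules.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed list of parameters that never change page content.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}


def normalize(url):
    """Return a comparable form of `url` without tracking noise."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
    )
    path = parts.path.rstrip("/") or "/"  # assumption: /shoes/ equals /shoes
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), path, urlencode(kept), "")
    )


def duplicate_groups(urls):
    """Map each normalized URL to the crawled variants that share it."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: variants for key, variants in groups.items() if len(variants) > 1}


crawled = [
    "https://example.com/shoes?utm_source=news",
    "https://example.com/shoes/",
    "https://example.com/shoes?color=red&size=9",
    "https://example.com/shoes?size=9&color=red",
    "https://example.com/hats",
]

for canonical, variants in duplicate_groups(crawled).items():
    print(canonical, "<-", variants)
```

Each group is a candidate for a canonical tag or a redirect. The size of a group gives a rough first signal for prioritizing fixes.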

Screaming Frog vs Ahrefs: Technical vs Data Intelligence

The discussion around Screaming Frog vs Ahrefs usually centers on purpose rather than quality. Ahrefs focuses on backlink intelligence, keyword tracking, and competitor analysis. Crawlers focus on technical structure. The two solve different problems. Some businesses try using only one tool. That usually creates blind spots. Technical issues require crawling. Authority growth requires backlink analysis. Mature SEO strategies combine both. Technical health supports content growth. Content growth supports backlink acquisition. SEO success rarely comes from a single tool. It comes from combining multiple data perspectives into one strategy.

SEO App Ecosystems and Technical Tool Integration

SEO apps now function as connected ecosystems rather than standalone platforms. Crawlers export data into visualization dashboards and reporting systems. Technical insights become easier to communicate to stakeholders. Decision-making speeds up. Teams can prioritize development tasks based on SEO impact. Technical SEO used to feel complex. Modern tools simplify interpretation. Visualization dashboards translate raw crawl data into actionable insights. This shift makes technical SEO accessible to marketing teams, not just developers. Collaboration improves. Strategy becomes clearer. Execution becomes faster.

Website SEO Audit Workflow for Agencies

Agencies often build structured website SEO audit workflows using crawlers as the starting point. The first crawl creates a baseline technical snapshot. That snapshot guides the rest of the audit process. After technical analysis, teams move into content evaluation and backlink analysis. The workflow prevents wasted time. Instead of guessing what might be wrong, teams follow data-driven priorities. Clients appreciate structured reporting. It builds trust. Technical audits also provide measurable progress benchmarks. Improvements can be tracked over time. Data replaces assumptions. Strategy becomes repeatable and scalable.
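The baseline idea is simple to express in code. Assuming each crawl has been reduced to a dictionary of URL to HTTP status code (a simplification; real exports carry many more columns), a comparison between the first crawl and a later one might look like this. The function name and sample data are hypothetical.

```python
def compare_crawls(baseline, current):
    """Summarize what changed between two crawls (URL -> status code)."""
    shared = set(baseline) & set(current)
    return {
        "new_urls": sorted(set(current) - set(baseline)),
        "removed_urls": sorted(set(baseline) - set(current)),
        "status_changes": {
            url: (baseline[url], current[url])
            for url in sorted(shared)
            if baseline[url] != current[url]
        },
    }


# Hypothetical snapshots taken a month apart.
january = {"/": 200, "/shoes": 200, "/sale": 200}
february = {"/": 200, "/shoes": 404, "/hats": 200}

changes = compare_crawls(january, february)
for section, values in changes.items():
    print(section, values)
```

Running the same comparison after every crawl turns the audit into a progress report: fixed errors show up as status changes back to 200, and regressions are visible the month they appear.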

Best SEO Tool Selection for Different Business Types

Choosing the best SEO tool depends on business size, site complexity, and marketing goals. Small blogs may rely more on keyword tools. Enterprise sites require technical crawlers. E-commerce platforms often need both. Technical errors scale quickly on large sites. One indexing problem can affect thousands of product pages. Crawlers help identify systemic problems early. Businesses investing in technical tools often see long-term ranking stability. Short-term ranking gains are unpredictable. Technical health creates sustainable growth foundations. That consistency matters more than short traffic spikes.

Search or Type URL Behavior and Crawl Discovery

“Search or type URL” is the prompt in the browser address bar, and users who type a site’s address there directly are a sign of brand strength. Crawl discovery, however, depends mainly on internal navigation structure. Poor linking structures create hidden content zones. Crawlers help identify navigation weaknesses. Improving internal linking often improves crawl efficiency. Better crawl efficiency tends to speed up indexing, and faster indexing creates more ranking opportunities. Small structural improvements can create measurable organic traffic growth. Technical SEO is often about removing friction, not adding complexity. Simplification usually improves search performance.
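One way to measure a "hidden content zone" is click depth: the smallest number of links a crawler has to follow from the homepage to reach a page. The sketch below computes it with a breadth-first search over a hypothetical internal link graph, then shows how one extra homepage link shortens the path. The URLs and the `click_depths` helper are illustrative only.

```python
from collections import deque


def click_depths(home, links):
    """Return the minimum number of clicks from `home` to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical link graph: the product is buried behind pagination.
links = {
    "/": ["/category"],
    "/category": ["/category/page-2"],
    "/category/page-2": ["/product-x"],
}
before = click_depths("/", links)

# The same site after adding one link from the homepage to the product.
improved = dict(links)
improved["/"] = ["/category", "/product-x"]
after = click_depths("/", improved)

print("depth before:", before["/product-x"])
print("depth after:", after["/product-x"])
```

Pages missing from the returned dictionary are unreachable from the homepage through internal links, which is another way of finding the hidden zones mentioned above.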

TheArticleSpot and Technical SEO Content Authority

Platforms like TheArticleSpot, which publish AI and IT-focused content, benefit heavily from technical SEO audits. Technical clarity helps content rank faster and stay indexed longer. Technical structure supports content discoverability. Publishing platforms often produce large content libraries. Without technical audits, content may remain undiscovered. Crawlers help content publishers maintain site structure health. That technical consistency supports long-term organic growth. Content quality matters. But technical accessibility determines whether content gets discovered in the first place.

Technical SEO Crawl Data Overview

| Technical Element | Why It Matters | SEO Impact |
|---|---|---|
| Status Codes | Shows crawl errors | Prevents indexing issues |
| Meta Data | Controls search snippets | Improves CTR |
| Canonical Tags | Prevents duplicate indexing | Preserves authority |
| Internal Links | Guides crawler navigation | Improves crawl depth |
| Page Speed Signals | Influences ranking | Improves UX & SEO |

Full FAQ Section

1. Is Screaming Frog free?

There is a free version with crawl limits and a paid version for larger sites.

2. Who should use technical crawlers?

SEO professionals, agencies, developers, and large website owners.

3. How often should I run a crawl audit?

Monthly for small sites, weekly or continuous for large sites.

4. Can beginners use technical crawlers?

Yes, but learning basic SEO concepts helps maximize value.

5. Does crawling improve rankings directly?

Not directly, but fixing issues found during crawling often improves rankings.

6. Is technical SEO more important than content?

Both matter. Technical SEO enables content to perform properly.

7. Can crawling detect security issues?

Sometimes indirectly, especially misconfigured redirects or indexing problems.

8. Do search engines crawl like SEO tools?

SEO crawlers simulate search bots but cannot replicate them perfectly.

Technical SEO rarely feels exciting. No viral moments. No sudden traffic explosions. Just steady improvements. Quiet fixes. Gradual clarity.

You crawl. You fix. You monitor. You repeat.

And over time… rankings stabilize. Visibility improves. Things just start working better.